Master RemoteIoT Batch Job Processing: Examples & Best Practices

In an era defined by the explosive growth of the Internet of Things (IoT), can organizations truly afford to overlook the potential of optimized data management strategies? Efficient data handling is no longer a luxury; it's a necessity.

The proliferation of IoT devices produces a continuous stream of data from many sources, and that data demands robust processing. Batch processing is one established way to handle it. RemoteIoT batch job solutions aim to manage IoT data precisely, efficiently, and at a scale that can grow with the deployment.

| Key Topic | Details |
| --- | --- |
| Definition | RemoteIoT batch job processing systematically handles extensive datasets collected from IoT devices, gathering data over a defined period and processing it in chunks. |
| Purpose | To optimize IoT data management strategies, enhance operational efficiency, and uncover valuable insights from massive datasets. |
| Advantages | Cost-effectiveness (reduces real-time infrastructure needs), improved accuracy (enables thorough data validation), and scalability (adapts to business growth). |
| Workflow | Data collection from IoT devices, storage in a centralized database, processing via algorithms or scripts, and report/insight generation. |
| Applications | Environmental monitoring (air quality), predictive maintenance (manufacturing), and various data analysis tasks. |
| Tools & Technologies | AWS Batch, Apache Spark, Google Cloud Dataflow, and others. |
| Best Practices | Define clear objectives, optimize data collection, monitor performance, and address common challenges like data overload and resource constraints. |
| Security Considerations | Encrypt data in transit and at rest, and implement role-based access control (RBAC) to protect data integrity. |
| Future Trends | Edge computing for reduced latency, AI integration for deeper insights, and continuous evolution driven by technological advancements. |

This guide surveys RemoteIoT batch job examples, from foundational concepts to advanced implementations, with practical advice for developers and decision-makers alike. Whether you are a developer integrating batch processing into an IoT project or a business leader weighing its advantages, the sections below cover how it works, where it is used, and how to run it well.

Understanding the mechanics of RemoteIoT batch processing is crucial to appreciating its potential. Unlike real-time processing, which demands immediate analysis, batch processing operates on a more measured timescale. It gathers data over a predetermined timeframe and processes it in manageable blocks. This approach is particularly advantageous when dealing with vast data volumes where instantaneous analysis isn't critical. The benefits are numerous: cost-effectiveness stems from the reduced need for expensive real-time infrastructure; improved accuracy results from the opportunity for thorough data validation and error checking; and scalability ensures adaptability as the volume of data grows. Within the context of RemoteIoT, batch jobs can be meticulously customized to align with specific business requirements, facilitating data aggregation, insightful analytics, and clear, concise reporting.
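The idea of processing accumulated data "in manageable blocks" can be sketched in a few lines. This is a minimal illustration, not a RemoteIoT API: the `iter_batches` helper, the record layout, and the batch size of 3 are all assumptions made for the example.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def iter_batches(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield successive lists of at most batch_size records."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Hypothetical readings accumulated over one collection window.
readings = [{"sensor_id": "s1", "value": v} for v in range(7)]
batch_sizes = [len(batch) for batch in iter_batches(readings, batch_size=3)]
print(batch_sizes)  # [3, 3, 1]
```

Because the helper consumes an iterator lazily, it also works when the readings come from a file or database cursor rather than an in-memory list.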

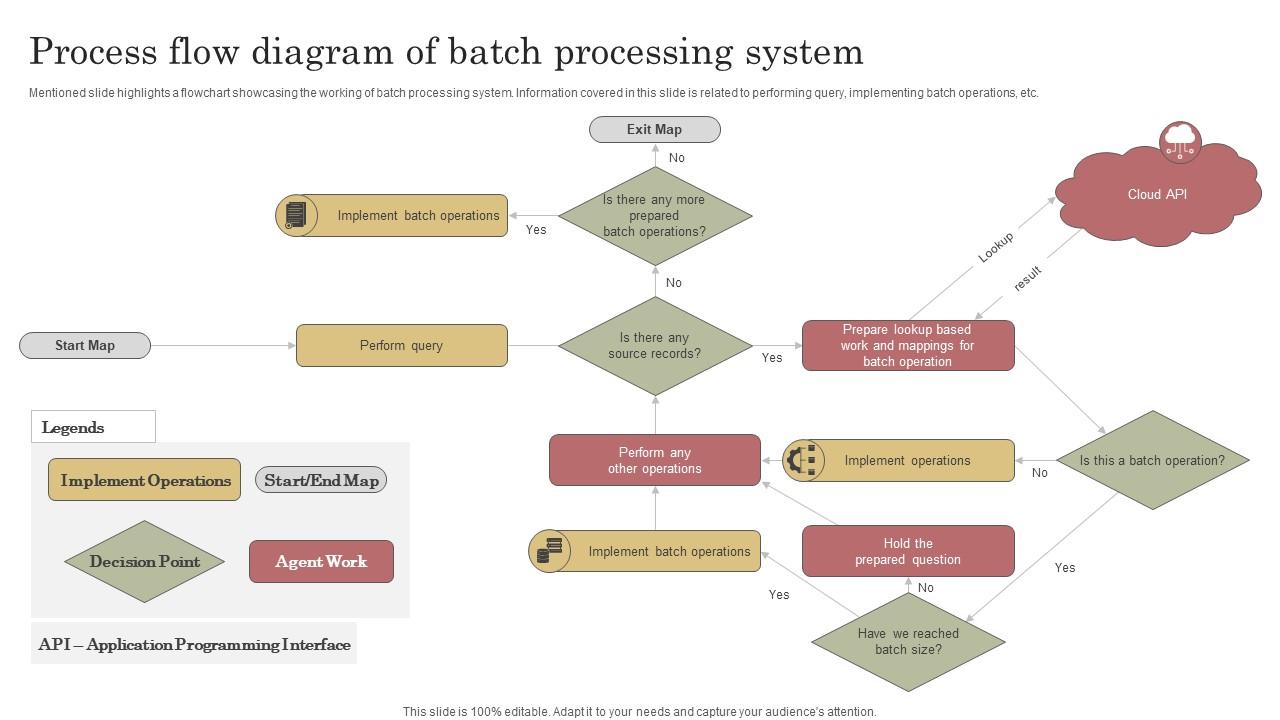

The operational framework of RemoteIoT batch jobs follows a structured, methodical approach. It begins with the meticulous collection of data directly from the IoT devices. This data is then securely stored within a centralized database or on a cloud platform. Once collected, the data undergoes processing, utilizing predefined algorithms or custom scripts. Finally, the processed information is translated into actionable reports or insightful analyses, supporting informed decision-making. This systematic process is designed to minimize errors and maximize efficiency, ensuring that data is handled effectively and reliably.
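The four stages described above (collect, store, process, report) might look like the following sketch. It assumes an in-memory SQLite database as a stand-in for the centralized store, and the table name, column names, and device identifiers are hypothetical.

```python
import sqlite3


def store_readings(conn, readings):
    """Store collected (device_id, metric, value) tuples in one table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(device_id TEXT, metric TEXT, value REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", readings)
    conn.commit()


def process_batch(conn):
    """Aggregate everything stored so far, one summary row per device."""
    rows = conn.execute(
        "SELECT device_id, AVG(value), COUNT(*) FROM readings "
        "GROUP BY device_id ORDER BY device_id"
    ).fetchall()
    return [
        {"device_id": device, "mean_value": avg, "samples": count}
        for device, avg, count in rows
    ]


def render_report(summary):
    """Turn the summary rows into a plain-text report."""
    return "\n".join(
        f"{row['device_id']}: mean={row['mean_value']:.1f} "
        f"over {row['samples']} samples"
        for row in summary
    )


conn = sqlite3.connect(":memory:")  # stand-in for a central or cloud database
store_readings(conn, [
    ("dev-a", "temperature", 20.0),
    ("dev-a", "temperature", 22.0),
    ("dev-b", "temperature", 18.5),
])
summary = process_batch(conn)
report = render_report(summary)
print(report)
```

Keeping each stage in its own function makes it possible to swap the storage layer or the report format without touching the aggregation logic.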

For businesses working with IoT, the advantages of RemoteIoT batch job processing fall into three areas: cost efficiency, accuracy, and scalability.

Cost efficiency is a primary driver. Batch processing inherently reduces the reliance on real-time infrastructure, which can be a significant expense. By processing data in batches, organizations can optimize resource allocation, leading to considerable cost savings. This is particularly appealing for businesses looking to streamline their operations and maximize their return on investment.

Improved accuracy is another crucial advantage. Batch jobs provide the opportunity for thorough data validation and error checking. This is especially critical when dealing with the massive datasets generated by IoT devices, where even minor errors can have significant repercussions. Batch processing helps ensure data integrity, leading to more reliable insights.

Scalability is a central concern in IoT. Because batch jobs run on accumulated data rather than on every incoming message, the same job design can serve a small network of devices or a large-scale deployment: as data volume grows, you add compute for the batch window or split the work into more batches. This lets your data processing capacity grow alongside your business.

One of the most prevalent and illustrative applications of RemoteIoT batch job processing lies in the realm of environmental monitoring. Consider the deployment of IoT sensors within natural habitats. These sensors are capable of collecting an enormous amount of data related to temperature, humidity, air quality, and a host of other environmental parameters. This data, when processed in batches, provides invaluable insights for researchers, policymakers, and environmental agencies.

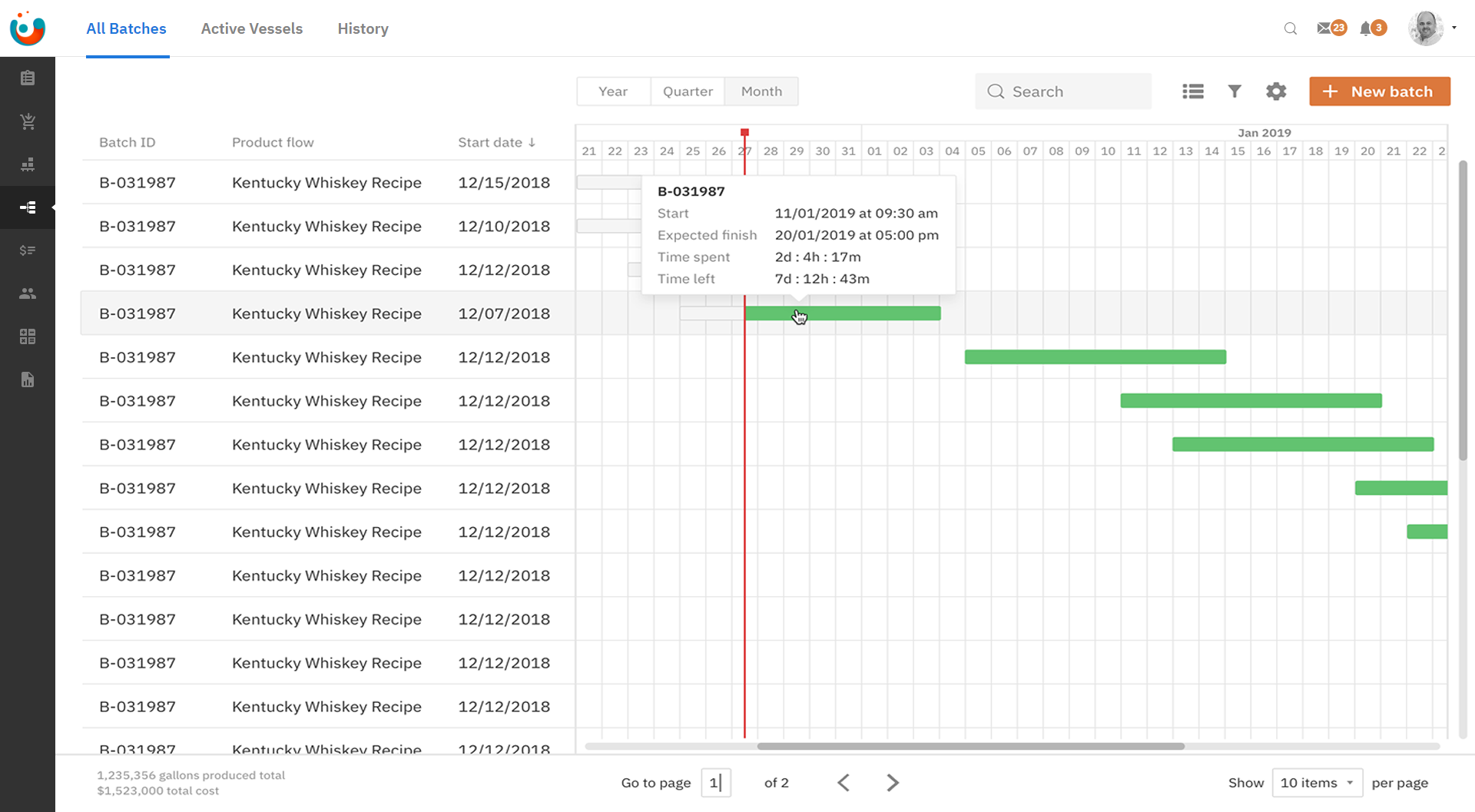

Take the case of a city government that uses IoT sensors to monitor air quality at locations across the city. The process has four steps. First, the sensors collect air quality data at frequent intervals, such as every hour. Second, the data is stored securely in a cloud-based database. Third, at the end of each day, a batch job processes the accumulated data. Finally, the processed data is used to generate daily, weekly, and monthly reports, which are distributed to the relevant stakeholders and help policymakers make informed decisions about pollution control measures.
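The end-of-day job in this scenario could be sketched as a simple grouping step. The example assumes each reading is a `(timestamp, location, pm25)` tuple; PM2.5 as the measured quantity, the location names, and the values are all illustrative and do not come from a real deployment.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def daily_report(readings):
    """Group readings by calendar day and location, then summarize each group."""
    grouped = defaultdict(list)
    for timestamp, location, pm25 in readings:
        grouped[(timestamp.date().isoformat(), location)].append(pm25)
    return {
        key: {
            "mean_pm25": mean(values),
            "max_pm25": max(values),
            "samples": len(values),
        }
        for key, values in sorted(grouped.items())
    }


# Hypothetical hourly readings from two locations.
readings = [
    (datetime(2024, 1, 1, 8), "downtown", 12.0),
    (datetime(2024, 1, 1, 9), "downtown", 18.0),
    (datetime(2024, 1, 1, 8), "harbor", 9.0),
    (datetime(2024, 1, 2, 8), "downtown", 30.0),
]
report = daily_report(readings)
for (day, location), stats in report.items():
    print(day, location, stats)
```

Weekly and monthly reports could reuse the same function with a different grouping key, for example the ISO week number instead of the date.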

Beyond environmental monitoring, RemoteIoT batch job processing is also extensively used in predictive maintenance applications. The ability to analyze data from IoT-enabled machinery allows businesses to detect potential issues before they result in costly downtime. This proactive approach is a key differentiator in the modern manufacturing landscape.

Implementing predictive maintenance with RemoteIoT batch jobs follows a few straightforward steps. First, IoT sensors are deployed on critical machinery. Next, operational data is collected over an extended period. This data is then processed in batches to identify patterns and anomalies that might indicate impending problems. Finally, alerts are generated to flag potential maintenance requirements. Acting on those alerts before a failure occurs improves machine reliability and can reduce maintenance costs.
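One simple way to flag anomalies in a batch is to compare each new reading against a baseline collected while the machine was known to be healthy. The sketch below uses a z-score test; the technique, the threshold of 3 standard deviations, and the vibration values are assumptions chosen for illustration, and real deployments often need more robust methods.

```python
from statistics import mean, pstdev


def find_anomalies(baseline, batch, threshold=3.0):
    """Return (index, value) pairs in batch that deviate from the baseline
    mean by more than threshold baseline standard deviations."""
    center = mean(baseline)
    spread = pstdev(baseline)
    if spread == 0:
        # A constant baseline gives no scale to measure deviation against.
        return []
    return [
        (index, value)
        for index, value in enumerate(batch)
        if abs(value - center) / spread > threshold
    ]


# Hypothetical vibration amplitudes: healthy history, then the latest batch.
baseline = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95]
latest_batch = [1.02, 0.98, 1.4, 1.01]
alerts = find_anomalies(baseline, latest_batch)
print(alerts)  # [(2, 1.4)]
```

Computing the mean and spread from a separate baseline, rather than from the batch itself, keeps a single extreme reading from inflating the standard deviation and hiding itself.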

A variety of tools and technologies are available to facilitate RemoteIoT batch job processing. The selection of the most appropriate tools often depends on the specific needs and requirements of the project. Three popular options are:

AWS Batch. AWS Batch is a fully managed service for running batch computing workloads in the cloud. It provisions compute resources and schedules containerized jobs through job queues, which makes it a reasonable choice for RemoteIoT batch jobs that need to scale without dedicated infrastructure management.

Apache Spark. Apache Spark is a powerful open-source framework, tailor-made for large-scale data processing. Its robust capabilities for handling complex computations make it a popular choice for demanding IoT batch jobs.

Google Cloud Dataflow. Google Cloud Dataflow provides a unified platform for both batch and streaming data processing. This versatile platform offers robust scalability and flexibility, making it a suitable option for a wide array of RemoteIoT applications.
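As an illustration of the first option above, a job can be submitted to AWS Batch through the `submit_job` operation of the boto3 Batch client. The sketch below is hedged: the queue name, job definition name, and `run_date` parameter are hypothetical, and a small fake client stands in for `boto3.client("batch")` so the example runs without AWS credentials.

```python
def submit_daily_job(batch_client, run_date: str) -> str:
    """Submit one daily aggregation job and return its job ID.

    batch_client is expected to behave like boto3.client("batch").
    """
    response = batch_client.submit_job(
        jobName=f"iot-daily-aggregate-{run_date}",
        jobQueue="iot-batch-queue",            # hypothetical queue name
        jobDefinition="iot-daily-aggregate",   # hypothetical job definition
        parameters={"run_date": run_date},     # substituted into the job command
    )
    return response["jobId"]


class FakeBatchClient:
    """Stand-in used only for this example; it records calls instead of
    contacting AWS."""

    def __init__(self):
        self.calls = []

    def submit_job(self, **kwargs):
        self.calls.append(kwargs)
        return {"jobName": kwargs["jobName"], "jobId": "fake-job-id"}


client = FakeBatchClient()
job_id = submit_daily_job(client, "2024-01-01")
print(job_id, client.calls[0]["jobName"])
```

In a real environment the fake client would be replaced by `boto3.client("batch")`, and the queue and job definition would need to exist in the account beforehand.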

The successful implementation of RemoteIoT batch job processing necessitates the adherence to a set of best practices. These practices are crucial for ensuring efficiency, accuracy, and the overall effectiveness of the system. Some key recommendations include:

Defining clear objectives is a crucial first step. Before embarking on the implementation of a batch job, it is essential to clearly define the objectives and the expected outcomes. This clarity will guide the design and implementation process effectively, ensuring that the system aligns with the desired goals.

Optimizing data collection is another critical element. The configuration of IoT devices should be carefully considered, ensuring that they collect only the necessary data. This approach reduces storage requirements and significantly improves the overall processing efficiency of the batch jobs.

Monitoring performance regularly is also essential. Tracking how long each batch job runs and how many resources it uses helps identify bottlenecks and areas for improvement, and analytics tools can provide further insight into job execution and resource utilization.
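Performance monitoring can start very small, for instance by recording the duration and outcome of every job run. The following sketch uses a context manager for that purpose; the `track_job` name and the metrics list are assumptions for the example, and a production system would typically send these values to a metrics service instead.

```python
import time
from contextlib import contextmanager


@contextmanager
def track_job(name, metrics):
    """Record the duration and final status of the wrapped job run."""
    start = time.perf_counter()
    status = "failed"
    try:
        yield
        status = "succeeded"
    finally:
        metrics.append({
            "job": name,
            "status": status,
            "seconds": time.perf_counter() - start,
        })


metrics = []

with track_job("daily-aggregate", metrics):
    total = sum(range(1000))  # placeholder for the real batch work

try:
    with track_job("broken-job", metrics):
        raise RuntimeError("simulated failure")
except RuntimeError:
    pass  # the failure is still recorded in metrics

for entry in metrics:
    print(entry["job"], entry["status"], f"{entry['seconds']:.6f}s")
```

Recording failures alongside successes matters here, since a job that silently stops running is one of the bottlenecks monitoring is meant to surface.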

While RemoteIoT batch job processing offers numerous benefits, it can also present challenges. Addressing these challenges proactively is vital for a successful implementation. Some common issues and their solutions include:

Data overload is a common issue. To address this, implementing data filtering and compression techniques can significantly reduce the volume of data that is processed in each batch. This will improve processing efficiency and reduce resource consumption.
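Filtering and compression can be combined in one step before a batch is stored or transmitted. In this sketch the set of fields to keep, the record layout, and the use of gzip over newline-delimited JSON are illustrative assumptions rather than a prescribed format.

```python
import gzip
import json

# Hypothetical: the only fields the downstream batch job actually needs.
KEEP_FIELDS = ("device_id", "timestamp", "temperature")


def filter_record(record):
    """Drop every field that is not in KEEP_FIELDS."""
    return {key: record[key] for key in KEEP_FIELDS if key in record}


def compress_batch(records):
    """Filter the records, then gzip them as newline-delimited JSON."""
    payload = "\n".join(
        json.dumps(filter_record(record), sort_keys=True) for record in records
    )
    return gzip.compress(payload.encode("utf-8"))


def decompress_batch(blob):
    """Inverse of compress_batch: return the list of filtered records."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


# Hypothetical raw readings carrying fields the batch job does not use.
raw_records = [
    {
        "device_id": f"dev-{i % 5}",
        "timestamp": 1700000000 + 60 * i,
        "temperature": 20.0 + (i % 10) * 0.5,
        "firmware": "1.4.2",
        "debug_log": "ok " * 10,
    }
    for i in range(200)
]
blob = compress_batch(raw_records)
restored = decompress_batch(blob)
raw_size = len(json.dumps(raw_records).encode("utf-8"))
print(f"{raw_size} bytes raw, {len(blob)} bytes filtered and compressed")
```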

Resource constraints can also pose a challenge. To overcome this, it's recommended to optimize resource allocation by scheduling batch jobs during off-peak hours. Leveraging cloud-based solutions can also provide the scalability needed to handle the demands of the workload.
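Scheduling for off-peak hours comes down to deciding when the next permitted window begins. The helper below is a minimal sketch that assumes a window of 01:00 to 05:00 local time that does not cross midnight; both the window and the function name are hypothetical.

```python
from datetime import datetime, time, timedelta


def next_off_peak_start(now, window_start=time(1, 0), window_end=time(5, 0)):
    """Return now if it falls inside the off-peak window, otherwise the
    start of the next window. Assumes window_start < window_end."""
    if window_start <= now.time() < window_end:
        return now
    candidate = datetime.combine(now.date(), window_start)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


afternoon = next_off_peak_start(datetime(2024, 1, 1, 14, 30))
early = next_off_peak_start(datetime(2024, 1, 1, 0, 30))
inside = next_off_peak_start(datetime(2024, 1, 1, 2, 0))
print(afternoon, early, inside, sep="\n")
```

A scheduler could call this function whenever a job is ready and delay submission until the returned time.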

As the scale of your IoT deployment grows, ensuring the scalability of your batch job processing solution becomes essential. Several considerations are paramount:

Cloud-based solutions provide an ideal foundation for scalability. Platforms like AWS, Google Cloud, and Microsoft Azure offer highly scalable infrastructure tailored for batch processing. These platforms enable the easy adjustment of resources based on fluctuating demand. This is crucial for accommodating growth and managing peak workloads.

Adopting a microservices architecture can also significantly enhance scalability. Breaking down batch processing tasks into smaller, independent components allows for independent scaling of each service. This approach ensures that the system can adapt to the needs of the workload.

Data security is a paramount concern in RemoteIoT batch job processing. Maintaining the integrity and confidentiality of data requires adherence to stringent security best practices. The following are essential:

Data encryption, both in transit and at rest, is non-negotiable. Encrypting data while it moves between devices and services, and again when it is stored, safeguards against unauthorized access and preserves confidentiality.

Implement role-based access control (RBAC). Restrict access to batch job processing systems to authorized personnel only. Using RBAC ensures that only those with the proper permissions can access sensitive data and system functionalities.
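At its core, role-based access control maps roles to permissions and checks that mapping before any sensitive action. The role names and permission strings in this sketch are hypothetical; in practice they would usually be defined in the identity system of the platform that runs the batch jobs.

```python
# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "operator": {"job:submit", "job:read"},
    "analyst": {"job:read", "report:read"},
    "admin": {"job:submit", "job:read", "job:cancel", "report:read"},
}


def is_allowed(roles, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set()) for role in roles
    )


def require_permission(roles, permission):
    """Raise PermissionError unless one of the roles grants the permission."""
    if not is_allowed(roles, permission):
        raise PermissionError(f"missing permission: {permission}")


print(is_allowed(["analyst"], "report:read"))   # True
print(is_allowed(["analyst"], "job:cancel"))    # False

require_permission(["operator"], "job:submit")  # passes silently
try:
    require_permission(["operator"], "job:cancel")
    denied = False
except PermissionError:
    denied = True
print(denied)  # True
```

Unknown roles grant nothing in this sketch, so access is denied by default.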

The landscape of IoT batch processing is in constant flux, driven by technological advancements and changing business needs. Remaining informed about the emerging trends is crucial for staying ahead of the curve. Some of the key trends include:

Edge computing is gaining increasing traction as a means of reducing latency and improving overall processing efficiency. By performing batch jobs at the edge of the network, organizations can process data closer to its source, reducing the reliance on cloud-based solutions. This is particularly relevant for time-sensitive applications.

Artificial intelligence (AI) integration is becoming more prevalent. AI-powered batch processing solutions are emerging, enabling businesses to extract deeper insights from their IoT data. This includes machine learning algorithms for predictive analytics, anomaly detection, and pattern recognition.
